Continuous integration and deployment, made possible by DevOps, have transformed software delivery. Machine learning, however, follows a different path. Combining machine learning with DevOps demands a thorough reorganization of teams and systems, and many companies struggle to apply DevOps techniques to ML initiatives. The key is to respect both disciplines and adapt the processes of each to the other.

Data collection, model training, and evaluation run in cycles, not in a straight line. DevOps has to be restructured so that these ML loops fit into it. Integrating DevOps and machine learning calls for flexibility, automation, and teamwork. Without a tailored approach, deployments stall and models underperform. Teams have to become adaptable. Aligning infrastructure, pipelines, and objectives helps optimize MLOps processes. A successful merge requires a fundamental conceptual shift, not just new tools.

DevOps is mostly about consistent environments, automation, and fast delivery. Machine learning is exploratory and driven by data. Software engineering seeks stability, whereas ML is always experimenting. When the two processes merge, these opposing objectives cause friction. New problems arise in versioning models, managing data changes, and validating outcomes. Model outputs are not deterministic: even with the same code, training can produce different results, for example because of random initialization or data shuffling. Traditional DevOps tools were not designed to manage this variability.

Furthermore, ML initiatives call for large datasets, which complicate storage and access control. Monitoring ML systems involves additional metrics, including model accuracy and drift detection. Teams often try to combine practices without realizing these differences exist. Close cooperation between engineers and data scientists is crucial. ML teams have to understand infrastructure demands, and DevOps engineers have to learn ML techniques. Understanding this gap guides the reorganization. Addressing these differences is the first step toward integration.

Traditional DevOps pipelines build code, run tests, and deploy rapidly. Machine learning pipelines need more stages: data ingestion, preprocessing, model training, evaluation, and versioning. Incorporating these stages is part of restructuring DevOps for ML. Automation becomes more intricate, because even a small data change can trigger model retraining. Pipelines therefore have to track model versions and data lineage. Tools like MLflow and Kubeflow help manage this complexity. Integration testing also works differently for ML.
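One way to make "a data change triggers retraining" concrete is to fingerprint the training data and compare it with the fingerprint recorded for the deployed model. The following is a minimal sketch using only the standard library. The function names and the shape of the lineage record are hypothetical, and a real pipeline would more likely delegate this to a data versioning or experiment tracking tool.

```python
import hashlib
import json


def fingerprint_rows(rows):
    """Return a stable SHA-256 hex digest for a list of JSON-serializable rows."""
    digest = hashlib.sha256()
    for row in rows:
        # sort_keys makes the digest independent of dict key order
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def needs_retraining(current_rows, last_trained_fingerprint):
    """True when the data differs from what the deployed model was trained on."""
    return fingerprint_rows(current_rows) != last_trained_fingerprint


def lineage_record(model_version, rows):
    """Hypothetical lineage entry linking a model version to its training data."""
    return {"model_version": model_version, "data_sha256": fingerprint_rows(rows)}
```

Storing the record next to each model version lets a pipeline answer both "which data produced this model?" and "has the data changed since then?" with a single hash comparison.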

Validation of model performance in the pipeline takes on the role that unit tests play for conventional code. Thresholds for recall, accuracy, and precision become part of the pipeline logic, and these metrics drive deployment decisions. Pipelines also need rollback plans for models that underperform. Continuous ML deployment requires ongoing monitoring of production results, and feedback loops have to trigger retraining cycles. Including these components in pipeline design helps optimize MLOps processes. Building modular, reusable pipeline components lowers maintenance effort. With careful design, ML pipelines can match DevOps speed without compromising model quality.
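A metric gate of this kind can be sketched in a few lines. The example below assumes binary labels (0 or 1), and the threshold values are purely illustrative. The names `Thresholds`, `classification_metrics`, and `deployment_decision` are hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    # Illustrative values only; real thresholds depend on the use case.
    accuracy: float = 0.90
    precision: float = 0.85
    recall: float = 0.80


def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, and recall for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs) if pairs else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


def deployment_decision(metrics, thresholds=Thresholds()):
    """Return ("deploy", []) or ("rollback", [names of failed metrics])."""
    failed = [
        name
        for name in ("accuracy", "precision", "recall")
        if metrics[name] < getattr(thresholds, name)
    ]
    return ("deploy", []) if not failed else ("rollback", failed)
```

In a CI/CD job, the evaluation step would call `deployment_decision` on held-out data and fail the stage (or keep the previous model version) whenever the result is `"rollback"`.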

Success takes much more than tools. Organizational structure has to change as well. Combining DevOps with machine learning means blending skill sets. Data scientists often work in isolation, while engineers focus mostly on deployment. Establish shared responsibility in cross-functional teams. Everyone should understand the whole end-to-end process. Teams that are aligned on goals communicate better. Integrating DevOps and machine learning calls for a common culture.

Promote openness in experimentation. Share ideas and record outcomes. Use regular check-ins to keep development aligned. Train both groups: help engineers understand ML models and tooling, and teach data scientists the infrastructure essentials. To work together, teams have to trust one another. Break down the silos that impede progress. Dashboards and shared data foster accountability. Celebrate successes together and review failures collectively. Start with people when restructuring DevOps for ML. Technical achievement follows from cultural alignment; even the best tools will not produce results without cooperation. Build a team that learns, adapts, and grows together.

Machine learning requires vast amounts of data, and training models consumes GPU or TPU capacity. Scaling infrastructure manually wastes time. Automate resource provisioning with Terraform and Kubernetes. For portability, consider containerization. ML models demand consistency across environments, and containers help keep development and production compatible. Control compute with auto-scaling rules. Reduce costs with on-demand clusters or spot instances. Storage also needs to scale, because versioned datasets grow rapidly. Control storage costs using object storage with lifecycle policies. Automate logs and backups.
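To illustrate what an auto-scaling rule computes, here is a simplified sketch of the proportional rule described in the Kubernetes Horizontal Pod Autoscaler documentation: desired replicas = ceil(current replicas × current metric / target metric). The function name, the integer percentage inputs, and the default limits are assumptions made for this example. The real autoscaler also applies a tolerance band and stabilization windows, which are omitted here.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule, clamped to [min_replicas, max_replicas].

    Utilization values are percentages (for example 90 for 90%).
    """
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    return max(min_replicas, min(max_replicas, raw))
```

For example, four inference replicas running at 90% utilization against a 60% target would be scaled to six, while the same four replicas at 30% would be scaled down to two.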

Track resource consumption to find and clear bottlenecks. Monitoring tools help track the health of the infrastructure. Alert teams to outages or performance degradation. Infrastructure-as-Code makes configurations repeatable. Standardize environments so that models are reproducible. Automate infrastructure from the beginning to optimize MLOps processes. Test all infrastructure changes through CI/CD pipelines before applying them. Secure every component of your infrastructure, because data leaks undermine trust. Manage access credentials as secrets. Scalable MLOps depends heavily on infrastructure. Make it transparent, robust, and flexible.

Deploying a model is not the end. ML-driven systems change over time, and only continuous monitoring keeps them effective. Track more than system uptime: monitor model drift, user interaction, and predictions. A model may lose accuracy over time, because its behavior changes as the data changes. Track prediction confidence and the distribution of input data. Raise alerts when metrics cross their thresholds. Feedback loops are part of how models improve. Retrain models with real-time feedback. Track user errors and behavior. Automate these retraining cycles wherever feasible.
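Tracking the input distribution can be as simple as comparing a live sample of one numeric feature against the training baseline. The sketch below uses the Population Stability Index (PSI), which is one common choice and not something the approach above prescribes. The function names are hypothetical, both samples are assumed to be non-empty, and the 0.2 alert threshold is a frequently quoted rule of thumb that should be treated as an assumption.

```python
import math


def bin_proportions(values, lo, width, bins):
    """Share of values per equal-width bin; out-of-range values go to the edge bins."""
    counts = [0] * bins
    for v in values:
        idx = int((v - lo) / width)
        counts[max(0, min(bins - 1, idx))] += 1
    # A small floor avoids log(0) and division by zero for empty bins.
    return [max(c / len(values), 1e-6) for c in counts]


def population_stability_index(baseline, live, bins=10):
    """PSI between a baseline sample and a live sample of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # constant baseline: fall back to a unit-width bin
    base = bin_proportions(baseline, lo, width, bins)
    cur = bin_proportions(live, lo, width, bins)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


def drift_detected(baseline, live, threshold=0.2):
    """True when the PSI exceeds the (assumed) alert threshold."""
    return population_stability_index(baseline, live) > threshold
```

A scheduled job could run `drift_detected` per feature on recent production inputs and raise an alert, or queue a retraining run, when it returns `True`.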

Track model performance by region or by cohort to identify where and why it drops. Plot trends on dashboards. Logged predictions and outcomes make root cause analysis possible. Organize DevOps for machine learning with observability in mind. Combine general-purpose monitoring such as Prometheus with ML-specific platforms. Promote a culture of feedback. Share knowledge across teams. For faster responses, route alerts into messaging tools. Monitoring is fundamental to a sustainable ML system, not an afterthought.
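Given logged predictions and their eventual outcomes, a per-cohort breakdown takes only a few lines. This is a minimal sketch: the record layout `(cohort, prediction, outcome)` and the function names are assumptions for illustration, and accuracy stands in for whichever metric the team actually tracks.

```python
from collections import defaultdict


def accuracy_by_cohort(records):
    """Map each cohort to the fraction of its predictions that matched the outcome.

    records: iterable of (cohort, prediction, outcome) tuples.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for cohort, prediction, outcome in records:
        totals[cohort] += 1
        if prediction == outcome:
            correct[cohort] += 1
    return {cohort: correct[cohort] / totals[cohort] for cohort in totals}


def underperforming_cohorts(records, min_accuracy):
    """Sorted list of cohorts whose accuracy falls below min_accuracy."""
    scores = accuracy_by_cohort(records)
    return sorted(c for c, acc in scores.items() if acc < min_accuracy)
```

The cohort key could be a region, a customer segment, or a device type; the same grouping then feeds both dashboards and alerts.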

Combining machine learning with DevOps holds enormous promise but calls for careful reorganization. Success requires changed processes, aligned teams, and automated infrastructure. Embracing flexible pipelines, regular feedback, and a collaborative culture can help you restructure DevOps for ML. Integrating DevOps and machine learning goes beyond merging tools to include combining people and processes. Optimize MLOps processes so that models are scalable, dependable, and adaptable. The transformation takes time, but the results can be substantial. Deliver better models faster. Embrace change and make the most of this developing field.

Advertisement

Learn about essential AI skills for network professionals to boost careers, improve efficiency, and stay future-ready in tech

Discover how AI, NLP, and voice tech are transforming chatbots into smarter, personalized, and trusted everyday partners

Perplexity AI is an AI-powered search tool that gives clear, smart answers instead of just links. Here’s why it might be better than Google for your everyday questions

Curious about what really happens when you run a program? Find out how compilers and interpreters work behind the scenes and why it matters for developers

Nvidia stock is soaring thanks to rising AI demand. Learn why Nvidia leads the AI market and what this means for investors

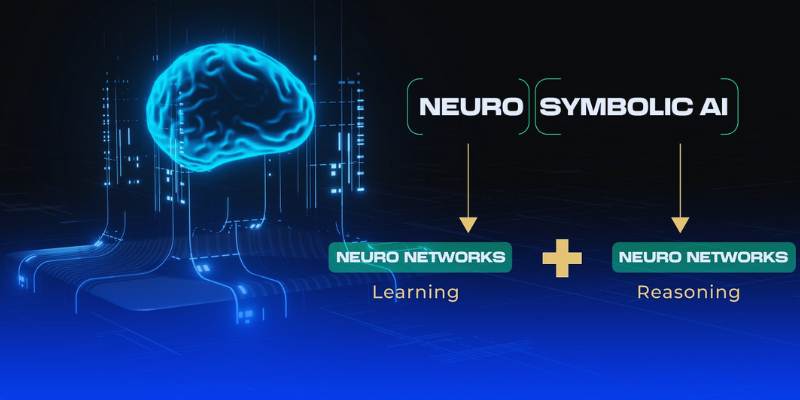

Neuro-symbolic AI blends neural learning and symbolic reasoning to create smarter, adaptable systems for a more efficient future

Discover why employee AI readiness is fairly low, and explore barriers and strategies to boost workplace AI skill development

Find out why Claude 3.5 Sonnet feels faster, clearer, and more human than other AI models. A refreshing upgrade for writing, coding, and creative work

Understand how global AI adoption and regulation are shaping its future, balancing innovation with ethical considerations

Ever needed fast analytics without heavy setups? DuckDB makes it easy to query files like CSVs and Parquet directly, right from your laptop or app.

Frustrated with messy data workflows? Learn how dbt brings structure, testing, and version control to your data pipeline without adding extra complexity

Explore edge computing in autonomous vehicles and real-time data in coffee shops powering smart edge technology applications