Working with data can sometimes feel like chasing something that's always a step ahead. One minute, your reports look fine, and the next, someone points out a missing number or a wrong calculation. If you've been there, you know how frustrating it can be. Not only does it create trust issues, but it also drains time and energy trying to trace what went wrong.

This is where dbt, or Data Build Tool, steps in. It doesn't magically fix your problems, but it gives you a clear, practical way to handle your data pipeline so things stop falling through the cracks. Instead of relying on scattered spreadsheets or fragile scripts, dbt offers a structured, transparent way to transform and manage data — making life easier for data teams and decision-makers alike.

When you think about moving data around, from collection to reporting, there's a lot that can go wrong. Raw data usually needs some cleaning, and it has to be transformed before it makes sense to the teams who rely on it. Without a good system, errors creep in and trust in the data falls apart. dbt is designed to fix that.

At its core, dbt lets you transform your raw data into something clean and reliable right inside your data warehouse. You write each model as a plain SQL SELECT statement, and dbt handles the rest: it builds each model as a view or table in the warehouse, works out the build order from the ref() references between models, and can generate documentation for what you built, all without making you jump through hoops.
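To make the dependency handling concrete, here is a rough Python sketch of the idea. In dbt, a model points at another model with `{{ ref('model_name') }}`, and dbt uses those references to decide the build order. This is not how dbt is implemented (dbt renders Jinja rather than scanning text with a regular expression, and `ref()` also has a two-argument form that this sketch ignores), and the model names and SQL are hypothetical. The sketch extracts the references and orders the models with Python's `graphlib.TopologicalSorter`, so every model comes after the models it depends on.

```python
import re
from graphlib import TopologicalSorter

# Hypothetical model files: model name -> SQL text.
MODELS = {
    "stg_orders": "select id, customer_id, amount from raw.orders",
    "stg_customers": "select id, name from raw.customers",
    "customer_revenue": """
        select c.id, c.name, sum(o.amount) as revenue
        from {{ ref('stg_customers') }} c
        join {{ ref('stg_orders') }} o on o.customer_id = c.id
        group by c.id, c.name
    """,
}

# Matches single-argument refs such as {{ ref('name') }} or {{ ref("name") }}.
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"]([\w.]+)['\"]\s*\)\s*\}\}")


def dependencies(sql: str) -> set[str]:
    """Return the names of all models referenced via ref() in a SQL string."""
    return set(REF_PATTERN.findall(sql))


def build_order(models: dict[str, str]) -> list[str]:
    """Order models so that each one appears after every model it refs."""
    # TopologicalSorter expects node -> iterable of predecessors.
    graph = {name: dependencies(sql) for name, sql in models.items()}
    return list(TopologicalSorter(graph).static_order())


for name in build_order(MODELS):
    print("building", name)
```

Because the order is derived from the references themselves, adding a new model never means editing a hand-maintained run list.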

Another big win with dbt is version control. A dbt project is just a folder of SQL and YAML files, so your transformations can live in Git alongside the rest of your code. This means changes are trackable, testable, and reviewable, just like in software development. The result? Your data pipeline stops being a black box and starts feeling more like a well-oiled machine.

You might wonder what exactly makes dbt stand out. Here are a few features that users quickly fall in love with:

Model Building: In dbt, a "model" is just a SQL file that defines a transformation. You keep models lightweight, readable, and easy to understand. If you need to build complex logic, you do it by stacking small models on top of each other, not by writing massive, unreadable SQL scripts.
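Here is a small illustration of stacking, using Python's built-in `sqlite3` module as a stand-in warehouse (an assumption for the sake of a runnable example; dbt itself runs against warehouses such as Snowflake, BigQuery, or Redshift). Each hypothetical model is a single SELECT that builds on the one before it, and creating it as a view is roughly what a dbt model using the view materialization amounts to.

```python
import sqlite3

# In-memory SQLite database standing in for the warehouse.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table raw_orders (id integer, customer_id integer, amount_cents integer, status text);
    insert into raw_orders values
        (1, 10, 2500, 'completed'),
        (2, 10, 1000, 'returned'),
        (3, 11, 4200, 'completed');
""")

# Each hypothetical "model" is one small SELECT; later models build on earlier ones.
models = [
    ("stg_orders",
     "select id, customer_id, amount_cents / 100.0 as amount, status from raw_orders"),
    ("completed_orders",
     "select * from stg_orders where status = 'completed'"),
    ("customer_revenue",
     "select customer_id, sum(amount) as revenue from completed_orders group by customer_id"),
]

# Wrap each SELECT in CREATE VIEW, in dependency order.
for name, select_sql in models:
    conn.execute(f"create view {name} as {select_sql}")

rows = conn.execute(
    "select customer_id, revenue from customer_revenue order by customer_id"
).fetchall()
print(rows)  # [(10, 25.0), (11, 42.0)]
```

Each layer stays readable on its own, and if the definition of a "completed" order changes, only one small model needs editing.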

Testing: With dbt, you can define tests to make sure your data stays accurate. For example, you can easily check that a column is never null or that its values are always unique. These tests run with the dbt test command, or alongside your models when you use dbt build.
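Under the hood, dbt's built-in `not_null` and `unique` tests compile to queries that select the rows violating the rule, and a test passes when that query returns nothing. The Python sketch below imitates that pattern with an in-memory SQLite table. The table, its data, and the helper functions are hypothetical; in a real project you would declare these tests on a column in a YAML properties file rather than write the SQL yourself.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table stg_customers (id integer, email text);
    insert into stg_customers values (1, 'a@example.com'), (2, null), (2, 'b@example.com');
""")


def not_null_test(table: str, column: str) -> str:
    """SQL selecting failing rows: any row where the column is null."""
    return f"select * from {table} where {column} is null"


def unique_test(table: str, column: str) -> str:
    """SQL selecting failing rows: any non-null value that appears more than once."""
    return (
        f"select {column}, count(*) as n from {table} "
        f"where {column} is not null group by {column} having count(*) > 1"
    )


def run_test(sql: str) -> int:
    """Return the number of failing rows; zero means the test passes."""
    return len(conn.execute(sql).fetchall())


results = {
    "not_null(email)": run_test(not_null_test("stg_customers", "email")),
    "unique(id)": run_test(unique_test("stg_customers", "id")),
    "not_null(id)": run_test(not_null_test("stg_customers", "id")),
}
for name, failures in results.items():
    status = "PASS" if failures == 0 else f"FAIL ({failures} rows)"
    print(f"{name}: {status}")
```

Framing tests as "select the bad rows" also makes failures easy to debug: the failing query is exactly the one you run to see what went wrong.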

Documentation: dbt auto-generates documentation based on your models and tests. It even creates a lineage graph showing how data flows from one table to the next. This turns documentation from a painful chore into a useful resource.
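A lineage graph is also handy for impact analysis: before changing a model, you can ask what sits downstream of it. The sketch below walks a hypothetical parent map (the kind of edges dbt derives from `ref()` calls) breadth-first to collect every descendant. It is similar in spirit to selecting `stg_customers+` with dbt's node selection syntax, except that this function leaves out the starting model itself.

```python
from collections import deque

# Hypothetical lineage: each model maps to the set of models it refs (its parents).
PARENTS = {
    "stg_orders": set(),
    "stg_customers": set(),
    "customer_revenue": {"stg_orders", "stg_customers"},
    "revenue_dashboard": {"customer_revenue"},
    "order_counts": {"stg_orders"},
}


def children_map(parents: dict[str, set[str]]) -> dict[str, set[str]]:
    """Invert parent edges so the graph can be walked downstream."""
    children: dict[str, set[str]] = {name: set() for name in parents}
    for child, ps in parents.items():
        for p in ps:
            children.setdefault(p, set()).add(child)
    return children


def downstream(model: str, parents: dict[str, set[str]]) -> set[str]:
    """Return every model that depends, directly or indirectly, on `model`."""
    children = children_map(parents)
    seen: set[str] = set()
    queue = deque([model])
    while queue:
        for child in children.get(queue.popleft(), set()):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


print(sorted(downstream("stg_customers", PARENTS)))
# ['customer_revenue', 'revenue_dashboard']
```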

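Environment Management: dbt makes it easy to keep separate development, staging, and production environments. Each environment is a target in your connection profile that can point at its own schema or database, which keeps your experiments and tests away from live data and prevents accidents before they happen.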
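As a rough illustration of how targets keep environments apart, this Python sketch mirrors the logic of dbt's default `generate_schema_name` macro: a model lands in the target's schema, or in the target schema plus an underscore and the custom schema name when one is configured. The target names and schema names are hypothetical.

```python
from typing import Optional

# Hypothetical targets, loosely modeled on dev/prod targets in a connection profile.
TARGETS = {
    "dev": {"schema": "dbt_alice"},
    "prod": {"schema": "analytics"},
}


def generate_schema_name(custom_schema: Optional[str], target_name: str) -> str:
    """Mirror dbt's default rule: target schema, plus an optional custom suffix."""
    base = TARGETS[target_name]["schema"]
    if custom_schema is None:
        return base
    return f"{base}_{custom_schema.strip()}"


def relation_for(model: str, target_name: str, custom_schema: Optional[str] = None) -> str:
    """Schema-qualified name a model would be built as in the given target."""
    return f"{generate_schema_name(custom_schema, target_name)}.{model}"


print(relation_for("customer_revenue", "dev"))            # dbt_alice.customer_revenue
print(relation_for("customer_revenue", "prod"))           # analytics.customer_revenue
print(relation_for("customer_revenue", "prod", "marts"))  # analytics_marts.customer_revenue
```

Because the same model code resolves to a different schema per target, a developer running against dev never overwrites the tables that production dashboards read.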

If you're used to traditional ETL (Extract, Transform, Load) processes, dbt can feel like a shift, and in a good way. Instead of pulling data out, transforming it, and then loading it into the warehouse, dbt is built for the ELT pattern: data is extracted and loaded first, usually by a separate ingestion tool, and dbt handles the Transform step inside the warehouse.
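The sketch below walks through the three ELT steps in miniature, with SQLite standing in for the warehouse and a hypothetical CSV export standing in for the source system. Python does the extract and load, copying rows into a raw table unchanged; the cleanup (casting, trimming, deduplicating) happens afterward in SQL, which is the part dbt would own.

```python
import csv
import io
import sqlite3

# Extract: a hypothetical CSV export from a source system, messy as delivered.
SOURCE_CSV = """order_id,amount,country
1, 19.50 ,us
2,5.00,US
2,5.00,US
3,12.50,de
"""

warehouse = sqlite3.connect(":memory:")

# Load: copy rows into a raw table exactly as they arrived, all as text.
warehouse.execute("create table raw_orders (order_id text, amount text, country text)")
rows = list(csv.DictReader(io.StringIO(SOURCE_CSV)))
warehouse.executemany(
    "insert into raw_orders values (:order_id, :amount, :country)", rows
)

# Transform: clean and deduplicate inside the warehouse with SQL.
# The raw table stays untouched, so it can always be re-inspected.
warehouse.execute("""
    create view stg_orders as
    select distinct
        cast(order_id as integer)  as order_id,
        cast(trim(amount) as real) as amount,
        upper(trim(country))       as country
    from raw_orders
""")

cleaned = warehouse.execute("select * from stg_orders order by order_id").fetchall()
print(cleaned)  # [(1, 19.5, 'US'), (2, 5.0, 'US'), (3, 12.5, 'DE')]
```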

This small change brings a few big benefits:

Performance Boost: Modern warehouses like Snowflake, BigQuery, and Redshift are built to handle transformations at scale. Keeping the work inside the warehouse puts that compute to use directly and avoids shipping data out to a separate server and back.

Transparency: When you transform inside the warehouse, every step is visible. You can inspect tables and logs directly without guessing where something went wrong.

Flexibility: Adding a new model or tweaking an existing one doesn’t mean breaking the whole pipeline. In dbt, models are modular, and their relationships are clear. It’s much easier to update and maintain.

Think of dbt as a tool that helps you build your data house brick by brick, with each brick labeled, tracked, and stored neatly in place.

Using dbt isn't complicated, but a few simple habits can make your experience smoother:

Start Small: When first setting up dbt, avoid trying to refactor everything at once. Pick a few important models, set up your tests, and gradually expand.

Write Tests Early: It’s tempting to think you’ll add tests later, but they’re easiest to set up when you’re writing models for the first time.

Use Descriptive Naming: In dbt, models are stored as files, and by default each file name becomes the name of the table or view dbt creates in your warehouse. Clear naming keeps things readable for you and everyone else.
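A quick way to keep names consistent is to check them automatically. This Python sketch derives each model's relation name from its file name (dbt's default unless a model sets an `alias` config) and flags names that break a prefix convention or collide. The paths are hypothetical, and the `stg_`/`int_`/`fct_`/`dim_` prefixes are a common community convention, not something dbt enforces.

```python
from pathlib import PurePosixPath

# Assumed naming convention: staging, intermediate, fact, and dimension prefixes.
ALLOWED_PREFIXES = ("stg_", "int_", "fct_", "dim_")

# Hypothetical project layout.
MODEL_PATHS = [
    "models/staging/stg_orders.sql",
    "models/staging/stg_customers.sql",
    "models/marts/fct_orders.sql",
    "models/marts/final_v2.sql",
]


def relation_name(path: str) -> str:
    """Relation name = file name without the .sql suffix."""
    return PurePosixPath(path).stem


def naming_problems(paths: list[str]) -> list[str]:
    """Return names that break the prefix convention, then names used twice."""
    names = [relation_name(p) for p in paths]
    problems = [n for n in names if not n.startswith(ALLOWED_PREFIXES)]
    problems += sorted({n for n in names if names.count(n) > 1})
    return problems


print(naming_problems(MODEL_PATHS))  # ['final_v2']
```

A check like this could run in CI so that an ambiguous name such as `final_v2` is caught in review rather than after it shows up in the warehouse.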

Document Along the Way: It’s much easier to add descriptions to models and columns as you write them than to circle back months later.

Leverage Macros and Packages: dbt supports reusable SQL snippets called macros and a whole ecosystem of community-built packages. These can save you time and help you follow best practices without starting from scratch.
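Macros in dbt are written in Jinja, but the idea is easy to show in plain Python: a function that returns a SQL snippet, reused wherever it is needed. The name `cents_to_dollars` mirrors an example commonly used when explaining dbt macros; the function, tables, and data below are hypothetical, and SQLite's `round()` stands in for whatever numeric cast your warehouse would use.

```python
import sqlite3


def cents_to_dollars(column_name: str, scale: int = 2) -> str:
    """Return a SQL expression converting an integer cents column to dollars.

    Python stand-in for a Jinja macro: it produces SQL text, not a value.
    """
    return f"round({column_name} / 100.0, {scale})"


conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table raw_payments (id integer, amount_cents integer);
    insert into raw_payments values (1, 2550), (2, 250);
    create table raw_refunds (id integer, refund_cents integer);
    insert into raw_refunds values (1, 500);
""")

# The same snippet is reused by two hypothetical models instead of
# copy-pasting the conversion logic into each query.
payments_sql = (
    f"select id, {cents_to_dollars('amount_cents')} as amount "
    "from raw_payments order by id"
)
refunds_sql = (
    f"select id, {cents_to_dollars('refund_cents')} as refund "
    "from raw_refunds"
)

payments = conn.execute(payments_sql).fetchall()
refunds = conn.execute(refunds_sql).fetchall()
print(payments)  # [(1, 25.5), (2, 2.5)]
print(refunds)   # [(1, 5.0)]
```

If the rounding rule ever changes, it changes in one place, and every model that uses the snippet picks it up on the next run.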

Schedule Regular Runs: Set up automated schedules to rebuild your models regularly. This helps catch issues early, keeps your data fresh, and avoids last-minute surprises when stakeholders need reports.

dbt is more than just another tool in the data stack; it’s a smarter system for organizing, transforming, and testing your data flows. It brings structure without being rigid and makes best practices feel like the natural way to work. If you’ve ever felt like your data projects were running in circles or getting lost in endless fixes, dbt might be what brings real order to your workflow. It helps you move faster, with more confidence, and with fewer late-night bug hunts. Setting it up takes a little upfront effort, but the payoff is a pipeline that's clear, reliable, and easier to maintain over time: a solid foundation you can build on as your data needs grow.

Advertisement

Discover ChatGPT AI search engine and OpenAI search engine features for smarter, faster, and clearer online search results

Discover how GLUE and SQuAD benchmarks guide developers in evaluating and improving NLP models for real-world applications

Discover how AI transforms financial services by enhancing fraud detection, automating tasks, and improving decision-making

Know explainable AI techniques like LIME, SHAP, PDPs, and attention mechanisms to enhance transparency in machine learning models

Perplexity AI is an AI-powered search tool that gives clear, smart answers instead of just links. Here’s why it might be better than Google for your everyday questions

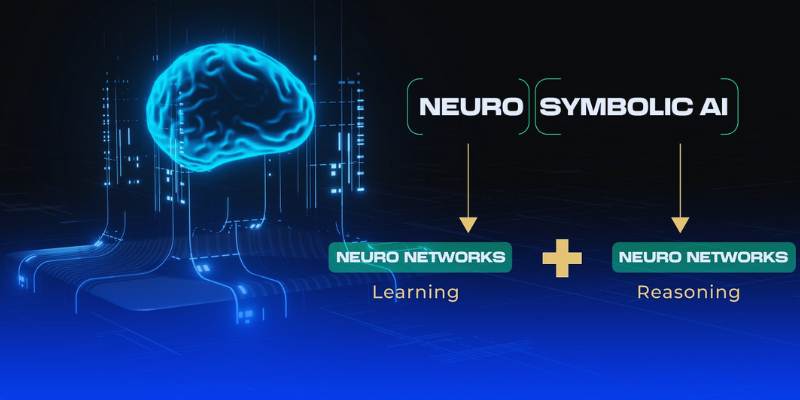

Neuro-symbolic AI blends neural learning and symbolic reasoning to create smarter, adaptable systems for a more efficient future

Still puzzled by self in Python classes? Learn how self connects objects to their attributes and methods, and why it’s a key part of writing clean code

IoT and machine learning integration drive predictive analytics, real-time data insights, optimized operations, and cost savings

Discover how AI, NLP, and voice tech are transforming chatbots into smarter, personalized, and trusted everyday partners

Learn how Automated Machine Learning is transforming the insurance industry with improved efficiency, accuracy, and cost savings

Nvidia stock is soaring thanks to rising AI demand. Learn why Nvidia leads the AI market and what this means for investors

AI transforms sales with dynamic pricing, targeted marketing, personalization, inventory management, and customer support