
In the fast-developing field of artificial intelligence (AI), understanding how machine learning models make decisions is vital. As AI systems grow more complex, ensuring transparency and credibility becomes increasingly important. This is where explainable artificial intelligence (XAI) helps: XAI aims to make machine learning models easier to interpret so that people can understand the basis for their predictions.

Clear explanations of AI decisions help build confidence and improve decision-making in fields such as healthcare and finance. This article discusses four main explainable AI methods: LIME, SHAP, partial dependence plots (PDPs), and attention mechanisms. These methods offer insight into how complex models operate, making it easier for users to trust and apply AI technology.

Below are the four explainable AI techniques that help make machine learning models more transparent and easier to interpret.

LIME (Local Interpretable Model-agnostic Explanations) focuses on explaining individual predictions. It fits an interpretable surrogate model that approximates the complex model's behavior in the local region around a given data point. This helps open up the black-box model and shows why it produced a particular prediction for that specific case. LIME works by slightly perturbing the input data and tracking how these variations change the model's output. It then trains a simple, interpretable model, such as linear regression, on these perturbed samples, weighting each one by how close it is to the original instance, so that the simple model approximates the complex model's behavior near that example. One benefit of LIME is that it works with any machine learning model, including deep learning networks. A drawback is that it must generate and score many perturbed samples for every explanation, so it requires more computational resources, especially for large datasets.
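To make the perturb-and-fit loop concrete, here is a minimal from-scratch sketch in Python with NumPy. It is a simplified illustration of the idea, not the API of the `lime` package: the function name `lime_style_explanation`, the Gaussian perturbations, the exponential proximity kernel, and the `noise_scale` and `kernel_width` defaults are illustrative assumptions (the real library handles sampling and feature representation differently). The two black-box models are hypothetical stand-ins for a trained classifier or regressor.

```python
import numpy as np


def lime_style_explanation(predict_fn, x, num_samples=500, noise_scale=0.5,
                           kernel_width=0.75, seed=0):
    """Explain predict_fn at x with a proximity-weighted linear surrogate.

    Simplified LIME-style sketch; parameter defaults are illustrative assumptions.
    Returns (intercept, per-feature coefficients) of the local linear model.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)

    # 1. Perturb the instance with Gaussian noise to sample its neighborhood.
    samples = x + rng.normal(scale=noise_scale, size=(num_samples, x.size))

    # 2. Query the black-box model on every perturbed sample.
    preds = np.asarray(predict_fn(samples), dtype=float)

    # 3. Weight samples by proximity to x with an exponential kernel.
    dists = np.linalg.norm(samples - x, axis=1)
    weights = np.exp(-(dists ** 2) / kernel_width ** 2)

    # 4. Weighted least squares: scale rows by sqrt(weight), then solve.
    design = np.column_stack([np.ones(num_samples), samples])
    sqrt_w = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(design * sqrt_w[:, None], preds * sqrt_w, rcond=None)
    return coef[0], coef[1:]


# Usage with two hypothetical black-box models.
linear_model = lambda X: 3 * X[:, 0] - 2 * X[:, 1] + 1
intercept, coefs = lime_style_explanation(linear_model, [0.5, -1.0])
print(coefs)  # close to [3, -2], since this black box is itself linear

nonlinear_model = lambda X: np.sin(X[:, 0]) + X[:, 1] ** 2
_, local_coefs = lime_style_explanation(nonlinear_model, [0.0, 1.0])
print(local_coefs)  # approximate local slopes around the point [0.0, 1.0]
```

Because the first toy model is linear, the surrogate recovers its coefficients almost exactly; for the non-linear model, the coefficients only describe its behavior near the explained point, which is exactly the "local" in LIME.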

Another well-known explainable AI method is SHAP (SHapley Additive exPlanations). Based on cooperative game theory, it calculates the contribution of every feature to a particular prediction, which clarifies the output of any machine learning model. SHAP values fairly distribute the difference between a prediction and a baseline (expected) prediction among the input features, showing how much each feature affected the outcome. SHAP offers interpretability both locally and globally: locally, it explains individual predictions; globally, aggregating SHAP values across the dataset reveals overall feature importance. SHAP's main advantages are that its explanations are consistent, locally accurate, easy to read, and mathematically grounded. However, SHAP can be computationally expensive, especially for complex models or datasets with many features, because exact Shapley values require evaluating the model on every subset of features. Still, it has become one of the most widely used XAI tools because of the clear explanations it provides.
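The Shapley formula behind SHAP can be computed exactly by brute force when there are only a handful of features. The sketch below is a from-scratch illustration, not the `shap` library's API: `exact_shapley_values` is a hypothetical helper, and it assumes that a feature "absent" from a coalition is replaced by a fixed baseline value, which is one common simplification (libraries typically average over a background dataset instead). Its nested loops over every subset of features also show why exact computation becomes expensive as the number of features grows.

```python
from itertools import combinations
from math import factorial


def exact_shapley_values(predict_fn, x, baseline):
    """Exact Shapley values of predict_fn at x, relative to a baseline input.

    phi_i = sum over subsets S of the other features of
            |S|! * (n - |S| - 1)! / n! * (v(S + {i}) - v(S)),
    where v(S) evaluates the model with features in S taken from x
    and every other feature taken from the baseline (an assumption).
    """
    n = len(x)

    def value(coalition):
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return predict_fn(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when it joins coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical model with an interaction term between features 0 and 1.
model = lambda z: 2 * z[0] + z[0] * z[1] - z[2]
x, baseline = [1, 2, 3], [0, 0, 0]
phi = exact_shapley_values(model, x, baseline)
print(phi)                                   # approximately [3.0, 1.0, -3.0]
print(sum(phi), model(x) - model(baseline))  # both approximately 1.0
```

The interaction term `z[0] * z[1]` contributes 2 to this prediction and is split equally between features 0 and 1, and the attributions add up to the gap between the prediction and the baseline prediction, which is the additive property SHAP is built on.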

Partial dependence plots (PDPs) provide another way to visualize the relationship between a feature and the target variable in a machine learning model. A PDP shows how the model's average prediction changes as a single feature is varied, averaging over the observed values of all other features. This helps users understand how particular features affect the model's predictions. For example, in a model that estimates housing prices, a PDP can show how changes in house size affect the predicted price, with other variables, such as location or number of bedrooms, averaged over the data. This lets users see how strongly a given feature influences the prediction. PDPs are simple to interpret and give clear insight into model behavior. However, they work best when the plotted feature is not strongly correlated with the others and its effect is similar across the data. In highly non-linear models where interactions between features play a major role, averaging can cancel out opposing effects, so PDPs may be less informative.
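The averaging step behind a PDP fits in a few lines. This Python/NumPy sketch computes a one-dimensional partial dependence curve by forcing one feature to each grid value for every row and averaging the model's predictions. `partial_dependence_curve` is a hypothetical helper (scikit-learn ships its own tooling in `sklearn.inspection`), and the toy models and data are made up for illustration.

```python
import numpy as np


def partial_dependence_curve(predict_fn, X, feature, grid):
    """Average prediction as column `feature` is set to each value in `grid`."""
    X = np.asarray(X, dtype=float)
    curve = []
    for value in grid:
        X_mod = X.copy()
        X_mod[:, feature] = value  # same value for every row
        # Average over the observed values of all the other features.
        curve.append(np.mean(predict_fn(X_mod)))
    return np.array(curve)


# Toy additive model: the PDP for feature 0 is a straight line with slope 2.
X = np.array([[1.0, 0.0], [2.0, 2.0], [3.0, 4.0]])
additive = lambda A: 2 * A[:, 0] + A[:, 1]
print(partial_dependence_curve(additive, X, feature=0, grid=[0.0, 1.0, 2.0]))  # [2. 4. 6.]

# Pure interaction: feature 0 pushes the prediction up for one row and down
# for the other, but the effects cancel, so the averaged curve is flat.
X_int = np.array([[0.0, -1.0], [0.0, 1.0]])
interaction = lambda A: A[:, 0] * A[:, 1]
print(partial_dependence_curve(interaction, X_int, feature=0, grid=[-2.0, 0.0, 2.0]))
```

The second example illustrates the limitation described above: feature 0 clearly matters for each individual row, yet its partial dependence curve shows no effect at all.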

Attention mechanisms are used extensively in deep learning, notably in computer vision and natural language processing (NLP) tasks. They let a model focus on the most relevant parts of the input data when making a prediction. Because they assign weights to particular input elements, attention mechanisms offer insight into how the model reaches its decisions. In NLP, attention weights indicate which words or phrases in a sentence the model relies on most for a prediction. In computer vision, attention can reveal which regions of an image the model focuses on to recognize an object. The main benefit of attention mechanisms is that they produce interpretable output by highlighting the salient features that influence the model's decision-making. However, attention-based models can be complex, and interpreting their weights well requires a thorough understanding of the model's architecture. High attention weights also do not always correspond to the features that actually drive a prediction.
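To show where these interpretable weights come from, here is a minimal NumPy sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, the form used in Transformer models. The token list, key vectors, and query are hypothetical toy values rather than outputs of a trained model, and the sketch omits the learned query/key/value projections and multiple heads that real architectures use.

```python
import numpy as np


def scaled_dot_product_attention(Q, K, V):
    """Return (output, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)
    # Subtract the row maximum before exponentiating for numerical stability.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # each row sums to 1
    return weights @ V, weights


# Hypothetical toy key vectors for the four tokens of a short review.
tokens = ["the", "movie", "was", "great"]
K = np.array([[0.1, 0.0], [0.5, 0.2], [0.0, 0.1], [2.0, 1.5]])
query = np.array([[1.0, 1.0]])  # hypothetical query vector

output, weights = scaled_dot_product_attention(query, K, K)  # values = keys here
for token, w in zip(tokens, weights[0]):
    print(f"{token:>6}: {w:.2f}")  # "great" receives the largest weight
```

Printing one weight per token is the kind of view attention-based explanations rely on, though a large weight shows where the model looked rather than proving why it decided.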

The choice of XAI technique depends on the type of model, the complexity of the data, and the requirements of the application. If you are using a straightforward, interpretable model such as linear regression or a decision tree, partial dependence plots (PDPs) can clearly show how individual features affect predictions. For more sophisticated models, such as deep neural networks or ensemble methods, LIME or SHAP is usually more appropriate. LIME is useful when you need local explanations for specific predictions, while SHAP offers more consistent, mathematically grounded explanations that also aggregate into global feature importance. If you work with sequential data or NLP tasks and your model already uses attention, attention mechanisms may be the best choice, since they indicate which parts of the input most affect the model's decision. Because these techniques differ in computational cost and complexity, interpretability ultimately has to be balanced against model accuracy. The right approach helps keep your model both transparent and efficient.

Explainable artificial intelligence (XAI) approaches help increase trust in machine learning models by making their decisions clearer. Techniques such as LIME, SHAP, partial dependence plots (PDPs), and attention mechanisms shed important light on how models make decisions. Each method has its own advantages and suits particular models and application scenarios. Choosing the right XAI technique helps ensure that your machine learning models are both accurate and interpretable, improving their reliability and accessibility. As artificial intelligence continues to transform industries, explainability will be essential to its ethical, responsible, and efficient use.

Advertisement

Learn about essential AI skills for network professionals to boost careers, improve efficiency, and stay future-ready in tech

Discover how AI in the NOC is transforming network operations with smarter monitoring, automation, and stronger security

Restructure DevOps for ML models and DevOps machine learning integration practices to optimize MLOps workflows end-to-end

Still puzzled by self in Python classes? Learn how self connects objects to their attributes and methods, and why it’s a key part of writing clean code

Discover the 6 common ways to use the SQL BETWEEN operator, from filtering numbers and dates to handling calculations and exclusions. Improve your SQL queries with these simple tips!

Compare GPUs, TPUs, and NPUs to find the best processors for ML, AI hardware for deep learning, and real-time AI inference chips

IoT and machine learning integration drive predictive analytics, real-time data insights, optimized operations, and cost savings

Perplexity AI is an AI-powered search tool that gives clear, smart answers instead of just links. Here’s why it might be better than Google for your everyday questions

Discover how GLUE and SQuAD benchmarks guide developers in evaluating and improving NLP models for real-world applications

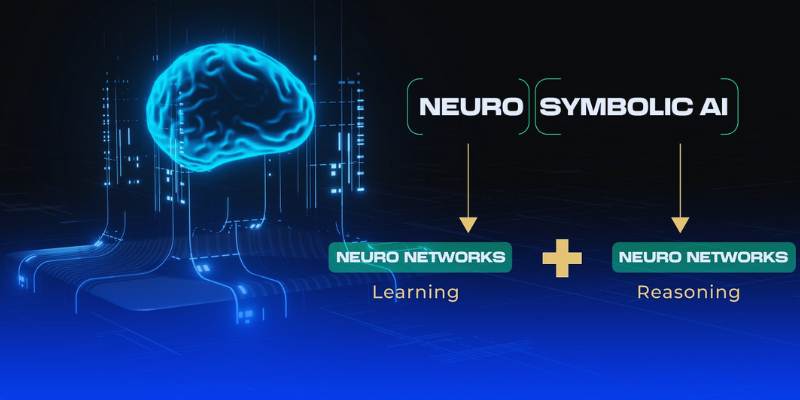

Neuro-symbolic AI blends neural learning and symbolic reasoning to create smarter, adaptable systems for a more efficient future

Understand how global AI adoption and regulation are shaping its future, balancing innovation with ethical considerations

Looking for the best open-source AI image generators in 2025? From Stable Diffusion to DeepFloyd IF, discover 5 free tools that turn text into stunning images